Version: Sage 100cloud Premium 2020 (Version 6.20.0.01)

Additional software: Scanco Operations Management & Multi-bin, some custom DSD programming

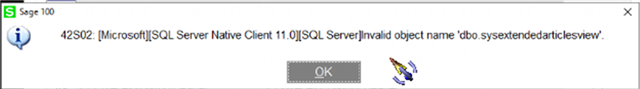

Hello all! First post here. I'm looking for some assistance with replicating data for reporting. We currently have 18+ companies running on (at the moment) 4 different installations / environments on separate servers, which means separate application and DB servers for each environment. Naturally, this is an issue when it comes to consolidated reporting. We currently have SQL Server Transactional Replication configured for each production company in its respective environment, and the data for each production company is replicated to a single reporting SQL Server. We have noticed that this interferes with certain utilities (e.g. Delete and Change Customers, Delete and Change Items) and with reinitializing data files. I have added screenshots of the errors we get for those. Here are some high-level questions:

- What are the best practices for using SQL Server Transactional Replication with our version of Sage?

- Will replication interfere with normal Sage application logic and / or cause corruption?

- Are there alternative solutions that can be deployed in-house using SQL Server tools or other methods?

- If not, what other solutions would you recommend?

Any and all help is appreciated!